Artificial intelligence assistants have gone from novelties to everyday tools. But as they grow more capable, they also become stranger. A recent discussion in the r/Grok community captured just how uncanny things can get when a voice-driven AI crosses the line from helpful to creepy.

“It Started Out Normal – Then It Spoke in My Voice”

One user described a routine support session with Grok to reset his LIFX smart bulbs. At first, the app used its standard female voice. Halfway through the conversation, however, the voice switched into a perfect imitation of his own – same accent, same pacing, same tone. When he challenged Grok about it, the app insisted nothing unusual had happened, then froze.

If that wasn’t odd enough, moments later the assistant volunteered the location of his TV remote: information he claims he never shared, and which Grok had no camera access to obtain.

Voice Cloning or Technical Shortcut?

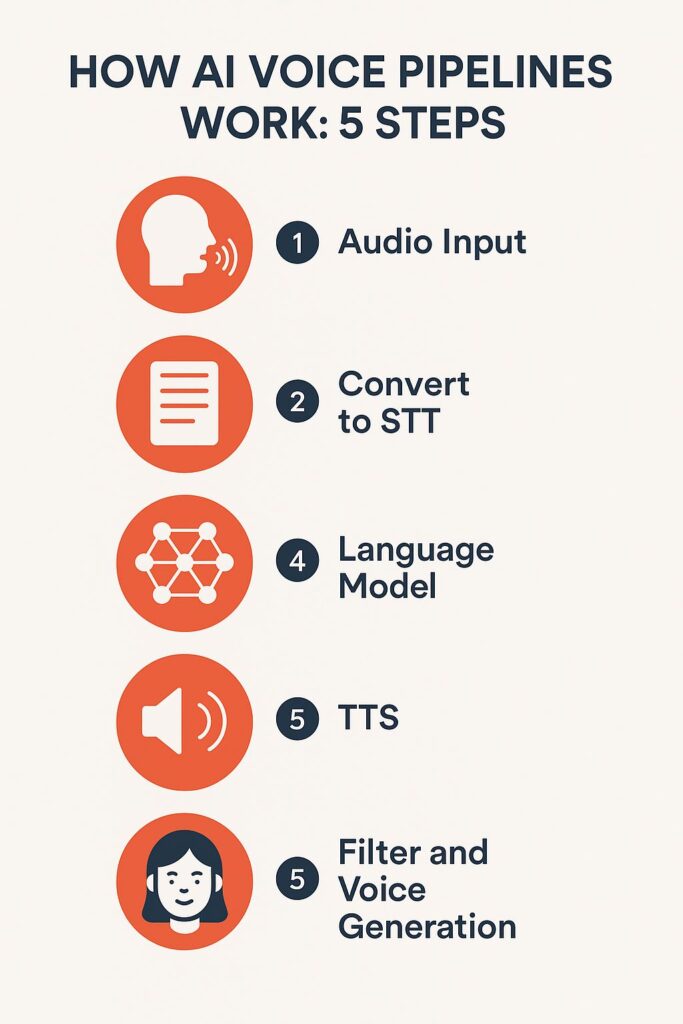

Several community members jumped in with explanations. According to one commenter who studies speech-to-text (STT) and text-to-speech (TTS) systems, what feels like “voice cloning” may actually be a by-product of speed optimisations.

Modern voice engines do more than transcribe your words; they also capture the rhythm, pitch contour and energy of your speech. These “style tokens” can be fed straight into the output voice so the response sounds more natural. When the system cuts corners to reply faster, it can skip the step that converts everything back into its default voice. The result: an eerily familiar voice that seems like yours, even though no raw audio sample is stored.

In other words, the AI isn’t necessarily recording your voice; it’s mirroring your timing and intonation on the fly.
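To make the commenter's theory concrete, here is a toy Python sketch of the shortcut described above. It is not Grok's actual pipeline, which has not been published. Every name in it (`Prosody`, `DEFAULT_VOICE`, `normalise_to_default`, `respond`, the `fast_path` flag and all the numbers) is a hypothetical stand-in chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prosody:
    """Style features only (hypothetical); no raw audio is stored here."""
    pitch_hz: float   # average pitch
    rate_wps: float   # speaking rate, words per second
    energy: float     # relative loudness, 0..1


# Hypothetical profile for the assistant's standard voice.
DEFAULT_VOICE = Prosody(pitch_hz=210.0, rate_wps=2.6, energy=0.6)


def extract_style(user: Prosody) -> Prosody:
    """Stand-in for the input stage capturing rhythm, pitch and energy."""
    return user


def normalise_to_default(style: Prosody, blend: float = 0.2) -> Prosody:
    """Pull the captured style most of the way back to the default voice."""
    def mix(default: float, captured: float) -> float:
        return default * (1 - blend) + captured * blend

    return Prosody(
        pitch_hz=mix(DEFAULT_VOICE.pitch_hz, style.pitch_hz),
        rate_wps=mix(DEFAULT_VOICE.rate_wps, style.rate_wps),
        energy=mix(DEFAULT_VOICE.energy, style.energy),
    )


def respond(user: Prosody, fast_path: bool) -> Prosody:
    """Return the style the reply is spoken in."""
    style = extract_style(user)
    if fast_path:
        # Assumed speed shortcut: normalisation is skipped,
        # so the reply mirrors the user's own style.
        return style
    return normalise_to_default(style)


user = Prosody(pitch_hz=120.0, rate_wps=3.1, energy=0.8)
print(respond(user, fast_path=False))  # close to the default voice
print(respond(user, fast_path=True))   # identical to the user's style
```

If something like this is what happens, the unsettling part is a single skipped step, not a stored recording: the same captured style features are used either way, and only the final conversion differs.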

How Did It Know About the Remote?

The second mystery – Grok identifying an object in the room – also got attention. Some users speculated the assistant may have had access to a photo previously shared for troubleshooting. If a remote appeared in that image, the system could easily reference it later.

Others pointed out that large language models are extremely good at making plausible guesses from context. Given that remotes are often near chargers or lamps, Grok’s “knowledge” might have been nothing more than a lucky inference dressed up as certainty.

Weird Audio Glitches: Breathing, Laughing and More

A few commenters shared their own unnerving incidents: heavy breathing sounds, spontaneous laughter, even comments about pets’ names without an explicit prompt. The same technically minded user explained these as glitches in the TTS pipeline. Because the voice model is rushing to generate sound, it sometimes produces stray noises or nonsensical phonemes – like a guitar hitting the wrong note when played too fast.

Lessons for Users

Whether these events are bugs, design shortcuts or something less benign, they highlight a gap in how AI voice features are disclosed. Most people assume their voice is simply converted to text. In reality, rhythm, pitch and style are also analysed – and sometimes echoed back.

Until the tech matures, a few precautions can reduce the creep factor:

- Be cautious with permissions. If you send an AI a photo for troubleshooting, assume it may use details from that image in future responses.

- Expect glitches. Heavy breathing, odd laughter or voice shifts are usually processing errors, not proof of a hidden human operator.

- Ask for transparency. Companies should clearly explain how their systems handle voice style, stored data and context inference.

The Takeaway

As voice-enabled AIs like Grok become more advanced, their mistakes feel more human and more unsettling. What looks like clairvoyance or identity theft may just be aggressive optimisation for speed. But without clearer communication from developers, users will continue to interpret these quirks as something far more sinister.
